Spark Dataframe Remove Non Printable Characters Python

A Scala helper, `def cleanRows(mytabledata: DataFrame): RDD[String]`, will do the work for a specific column (`myString1`) of type string. It assigns `mytabledata.withColumn("myString1", regexp_replace(col("myString1"), ...))` to `oneColumn_clean`. The return type can be an RDD or a DataFrame.

The Spark SQL function `regexp_replace` can be used to remove special characters from a string column in a Spark DataFrame. The regular expression depends on how special characters are defined. For instance, `[^0-9a-zA-Z]` can be used to match characters that are not alphanumeric.

A related question describes an unwanted character in the `description` column of a data frame and removes it with a UDF: `from pyspark.sql.functions import udf`, then `charReplace = udf(lambda x: x.replace(...))`, applied as `train_cleaned = train_triLabel.withColumn("dsescription", charReplace("description"))`.
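As a minimal sketch of the `regexp_replace` approach described above: the helper name `remove_special_chars` and the sample string are hypothetical, and the pattern assumes "special" means anything that is not an ASCII letter or digit. The built-in function runs inside Spark's engine, so it is usually preferable to a Python UDF for simple substitutions.

```python
import re

# Pattern from the text: anything that is not an ASCII letter or digit.
NON_ALNUM = r"[^0-9a-zA-Z]"


def remove_special_chars(df, column, pattern=NON_ALNUM):
    """Return df with every match of `pattern` removed from `column`.

    Hypothetical helper; `df` is assumed to be a pyspark.sql.DataFrame.
    """
    # Imported here so the pattern check below runs without Spark installed.
    from pyspark.sql import functions as F

    return df.withColumn(column, F.regexp_replace(F.col(column), pattern, ""))


# Plain-Python check of what the pattern removes (illustrative sample only).
sample = "Mavs-2023 (West)!"
cleaned_sample = re.sub(NON_ALNUM, "", sample)
```

With a DataFrame `df` that has a string column `description`, the call would be `remove_special_chars(df, "description")`. To keep extra characters such as spaces, add them inside the brackets.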


If you want to remove the rows with special characters, then this might help. To select the rows with special characters: `print(df[df.label.str.contains(r'[^0-9a-zA-Z]')])`. To drop those rows: `print(df.drop(df[df.label.str.contains(r'[^0-9a-zA-Z]')].index))`.


Spark Dataframe Remove Non Printable Characters Python

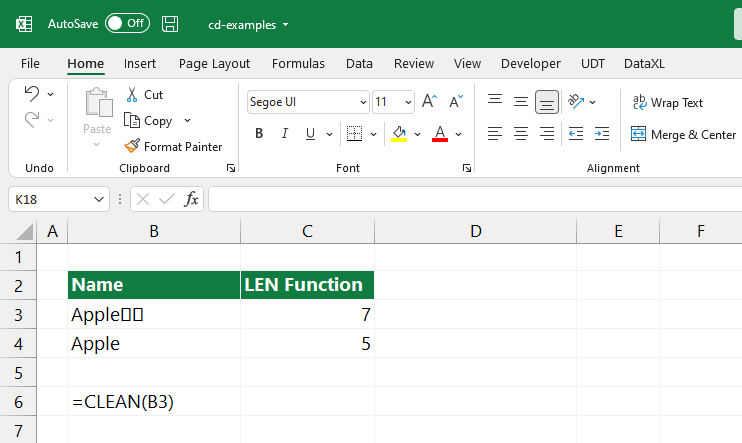

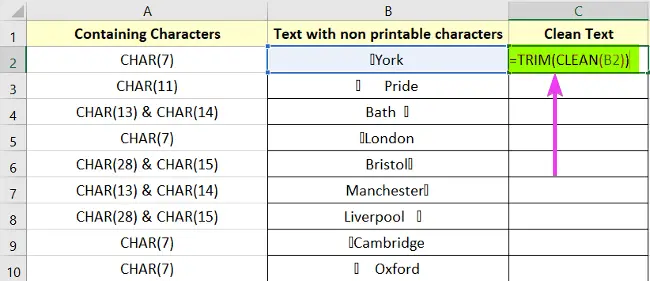

15 Ways To Clean Data In Excel ExcelKid

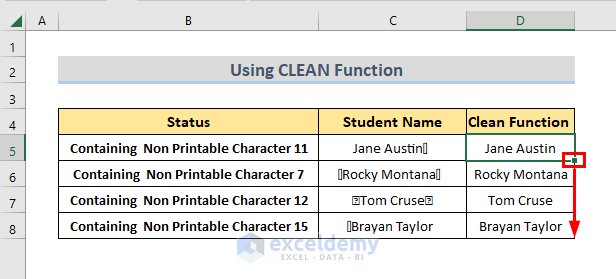

Remove Non Printable Characters In Excel 5 Methods

Remove Non Ascii Characters Python Python Program To Remove Any Non

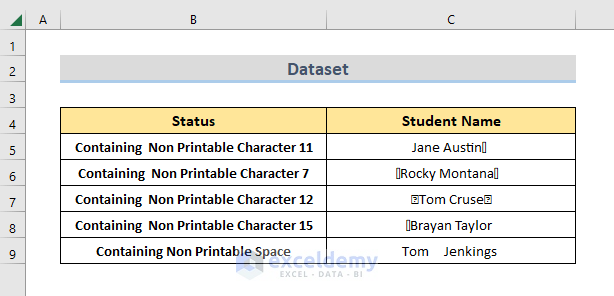

How To Remove Non Printable Characters In Excel 4 Easy Ways

How To Remove Non Printable Characters In Excel 4 Easy Ways

Python String Remove Non ASCII Characters From String Cocyer

https://stackoverflow.com/questions/49388260

How to delete non-printable characters in an RDD using PySpark. Need to remove non-printable characters from an RDD: `sqlContext.read.option("sep", ...).option("encoding", "ISO-8859-1").option("mode", "PERMISSIVE").csv(...).rdd.map(lambda s: s.replace("\xe2", ...))`.
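One way to generalize the single-character `replace` in the question is to strip everything outside printable ASCII from each line. This is only a sketch: `clean_line` and `clean_rdd` are hypothetical names, and it assumes the RDD holds plain strings (for example from `sc.textFile`), not the `Row` objects that `DataFrame.rdd` yields.

```python
import re

# Everything outside the printable ASCII range (space through tilde).
_NON_PRINTABLE_ASCII = re.compile(r"[^\x20-\x7E]")


def clean_line(line):
    """Remove control characters and non-ASCII characters from one string."""
    return _NON_PRINTABLE_ASCII.sub("", line)


def clean_rdd(rdd):
    """Apply clean_line to every element; `rdd` is assumed to be an RDD of str."""
    return rdd.map(clean_line)


# Local check on a sample containing U+00E2 and a bell character.
checked = clean_line("caf\xe2 \x07ok")
```

Note that this also drops legitimate accented letters, so it only suits data that is expected to be pure ASCII.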

https://stackoverflow.com/questions/66141218

I want to delete the last two characters from values in a column. The values of the PySpark dataframe look like this: 1000.0, 1250.0, 3000.0, and they should look like this: 1000, 1250, 3000.

https://stackoverflow.com/questions/62769007

Only the non-printable, non-ASCII characters need to be removed using regex. Example: something similar to a `select regexp_replace(col, ...)` with a print/ctrl character class, or a `select regexp_replace(col, ...)` with an alphanum character class. But I can't get it to work in Spark SQL with the SQL API. Can anyone please advise with a working example?
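A possible shape for such a query, offered as a sketch and not as the accepted answer: instead of POSIX classes it uses the range `[^ -~]` (anything outside space through tilde), which contains no backslashes and so avoids SQL string-escaping issues. The names `build_query`, `clean_with_sql`, `events`, and `description` are hypothetical.

```python
import re

# Negated range from space (0x20) to tilde (0x7E): matches every character
# that is not printable ASCII. No backslashes, so it is safe in a SQL literal.
PRINTABLE_ASCII_ONLY = "[^ -~]"


def build_query(table, column):
    """Build a Spark SQL statement that strips non-printable-ASCII characters."""
    return (
        f"SELECT regexp_replace({column}, '{PRINTABLE_ASCII_ONLY}', '') "
        f"AS {column} FROM {table}"
    )


def clean_with_sql(spark, table, column):
    """Run the query; `spark` is assumed to be an active SparkSession and
    `table` a registered table or temporary view."""
    return spark.sql(build_query(table, column))


query = build_query("events", "description")
```

The table and column names are interpolated directly, so this sketch should only be used with trusted identifiers.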

https://www.statology.org/pyspark-remove-special-characters

You can use the following syntax to remove special characters from a column in a PySpark DataFrame: `from pyspark.sql.functions import *`, then, to remove all special characters from each string in the `team` column, `df_new = df.withColumn('team', regexp_replace('team', '[^a-zA-Z0-9]', ''))`. The following example shows how to use this.

https://stackoverflow.com/questions/92438

An elegant, Pythonic solution to stripping non-printable characters from a string in Python is to use the `isprintable()` string method together with a generator expression or list comprehension, depending on the use case (i.e., size of the
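A minimal sketch of the `isprintable()` idea, using a generator expression; the function name `strip_non_printable` and the sample string are illustrative only. Note that `str.isprintable()` treats tabs and newlines as non-printable but keeps printable non-ASCII characters such as accented letters.

```python
def strip_non_printable(text):
    """Keep only the characters for which str.isprintable() is true."""
    return "".join(ch for ch in text if ch.isprintable())


# Tab, NUL, and newline are dropped; the space and the accented letter stay.
example = strip_non_printable("tab\there\x00 caf\u00e9\n")
```

The same function could be wrapped in a PySpark UDF or passed to `rdd.map`, at the cost of running Python code per row.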

You can use `DataFrame.fillna()` to replace null values. It is an alias for `na.fill()`: `DataFrame.fillna()` and `DataFrameNaFunctions.fill()` are aliases of each other. Parameters: `value` (int, long, float, string, or dict) is the value to replace null values with. If the value is a dict, then `subset` is ignored and `value` must be a mapping from column name (string) to replacement value.

Procedure to Remove Blank Strings from a Spark DataFrame using Python: to remove blank strings from a Spark DataFrame, follow these steps. To load data into a Spark DataFrame, one can use the `spark.read.csv` method, or create an RDD and then convert it to a DataFrame using the `toDF` method.

Removing non-ASCII and special characters in PySpark. RohiniMathur, New Contributor II, 09-23-2019 12:16 AM. I am running Spark 2.4.4 with Python 2.7, and the IDE is PyCharm. The input file (.csv) contains encoded values in some columns, as given below. File data looks like: COL1, COL2, COL3, COL4